The big thing about neural-style is its ability to separate the style and content of an image, and to synthesize new images that mix content and style from different sources. The original paper describing this new technique demonstrated how the style of famous paintings by Van Gogh, Kandinsky and Munch can be applied to photographs.

When I found neural-style, however, I wasn’t looking for a way to imitate famous paintings. I had been experimenting with Processing for artistic purposes, and was looking for ideas on how to turn images into something far less detailed, perhaps into areas of color and light.

So I conducted a set of experiments with neural-style, using only the lower layers (conv1_1, conv1_2 and conv2_1) to control content and style. I found that this way I could filter out details. Also, the process no longer imitated the style of an image, except in a very general manner: in the use of colors and perhaps in some larger shapes.
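To make the layer choice concrete, here is a minimal NumPy sketch of the kind of loss neural-style minimizes (the Gram-matrix formulation from the original paper), restricted to the three lower layers named above. It assumes the VGG feature maps have already been extracted into a dictionary keyed by layer name, each of shape (channels, height, width). The helper names and the alpha/beta weights are hypothetical, and this is an illustration of the idea rather than neural-style's own code. Any layer left out of the list, such as conv5_1, simply has no influence on the result.

```python
import numpy as np

# Layer names from the text; the only layers allowed to influence the loss.
LOWER_LAYERS = ("conv1_1", "conv1_2", "conv2_1")


def gram_matrix(features):
    """Channel-by-channel correlations of a (C, H, W) feature map."""
    c = features.shape[0]
    flat = features.reshape(c, -1)  # (C, H*W)
    return flat @ flat.T            # (C, C)


def content_loss(gen_feats, content_feats, layers=LOWER_LAYERS):
    """Squared error between feature maps, summed over the chosen layers only."""
    return sum(0.5 * np.sum((gen_feats[l] - content_feats[l]) ** 2) for l in layers)


def style_loss(gen_feats, style_feats, layers=LOWER_LAYERS):
    """Gram-matrix distance per layer, scaled by 1 / (4 N^2 M^2), equal layer weights."""
    total = 0.0
    for l in layers:
        n = gen_feats[l].shape[0]                          # number of channels
        m = gen_feats[l].shape[1] * gen_feats[l].shape[2]  # number of spatial positions
        diff = gram_matrix(gen_feats[l]) - gram_matrix(style_feats[l])
        total += np.sum(diff ** 2) / (4.0 * n**2 * m**2) / len(layers)
    return total


def total_loss(gen, content, style, alpha=1.0, beta=1e3, layers=LOWER_LAYERS):
    """Weighted sum of content and style losses (alpha and beta are illustrative)."""
    return alpha * content_loss(gen, content, layers) + beta * style_loss(gen, style, layers)
```

Because the Gram matrix discards spatial arrangement and these layers only see small neighborhoods, what survives from the style image is mostly local color statistics, which fits the observation that only the general use of color carries over.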

I started from this photo, taken a couple of weeks ago in Kaivopuisto park in Helsinki, Finland.

This two-minute video shows examples of new images created in this way. The longer animations were made by picking images from the iteration series while neural-style is still working to make the picture satisfy the content and style requirements. Most people seem to think that reaching adequate quality takes around 2000 iterations. That is true in many cases, especially when trying to create pictures with a lot of detail. However, I find that the way neural-style gradually modifies the image can often be even more interesting than the final result. The examples here are usually taken from between 100 and 300 iterations.
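Picking frames for an animation from the iteration series can be sketched as below. This assumes intermediate images were saved every few iterations with the iteration number at the end of the filename, e.g. out_100.png; the naming pattern and the function name are hypothetical, so adjust the regular expression to however the snapshots were actually written.

```python
import re

# Hypothetical snapshot naming: "<name>_<iteration>.png", e.g. "out_100.png".
SNAPSHOT = re.compile(r"_(\d+)\.png$")


def pick_frames(filenames, start=100, stop=300):
    """Return snapshot filenames with start <= iteration <= stop, ordered by iteration."""
    frames = []
    for name in filenames:
        match = SNAPSHOT.search(name)
        if match:  # skip files without an iteration number
            iteration = int(match.group(1))
            if start <= iteration <= stop:
                frames.append((iteration, name))
    # Sort numerically so that e.g. out_200 comes before out_1000.
    return [name for _, name in sorted(frames)]
```

Sorting on the parsed integer rather than the filename matters, since a plain string sort would place out_1000.png before out_200.png.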

Please note that I did not use anything by Edvard Munch for these images. The night views were created using a photo I took myself (see below). Also, what look like stylistic features are often just passing phases in the iteration series.

In the following pictures, I actually did use a work by a famous painter as the style model, namely a blue-and-white cutout by Matisse. However, the resulting images bear no resemblance to the style model, except perhaps in the sparse use of color. The blurred look derives from using only the lowest layers of the neural net.

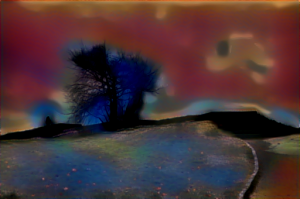

To create the night views, I used this photo, which I took myself.

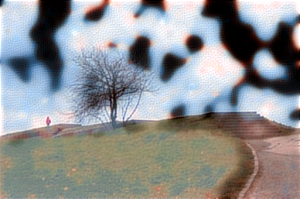

And the results…

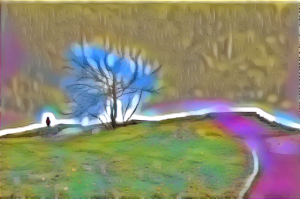

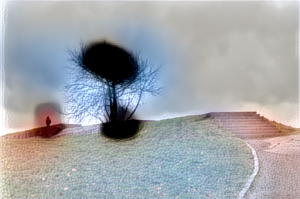

The following brighter views were created using the content image itself as the style model. Still, because only the lower layers were used, the look is nowhere near the photograph. The first image is from around 50 iterations; after that the look becomes smoother, and finally, after 200 iterations, the sky loses much of its color while the ground acquires a photorealistic texture.

In the following examples, I used a picture of a portrait painted with a palette knife as the style image. Still, the result shows no trace of the palette knife; only the greyish hues have influenced the picture.

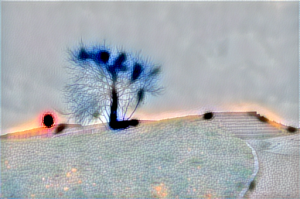

I am not entirely sure what I did to create these. Where do the black shapes come from? Perhaps somehow from the style image, which is the same as in the previous series. What is also interesting here is that in the iteration series, the dark shapes first appear in the sky and then gradually recede and disappear, while the ground becomes covered with shadow.

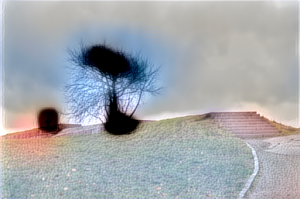

Finally, one of the very first tests in this series. I don’t really know what I did, but I liked the blurred look so much that I got carried away and experimented further.