New innovative GAN implementations are published every week. A particularly interesting one is CycleGAN, which promises to learn to do transformations, similar to what pix2pix does, but without needing pairs of corresponding images. In other words, it claims to be able to transform horses to zebras using a training set consisting of a) any horses and b) any zebras. Also it also appears to manage transform a painting by Monet into a photo image.

As the task of assembling a dataset of matching image pairs is often huge, being able to compose the datasets more freely feels a great advance. I was tempted to try what it can achieve if given a set of modern paintings of greatly varying style. So I assembled a set of 2000+ images of modern works of art, mostly but not exclusively paintings, and trained a CycleGAN using my own landscape photos as the other set, with the goal of seeing what this GAN would make of my photos. Will it make them look like art, and if so, in what style.

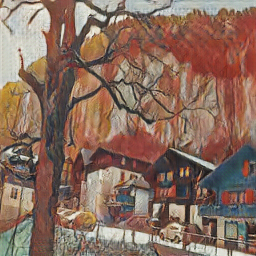

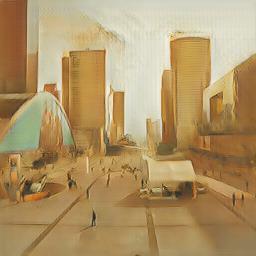

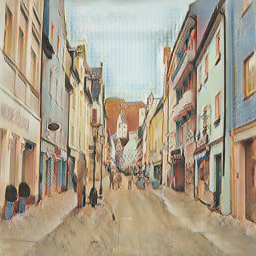

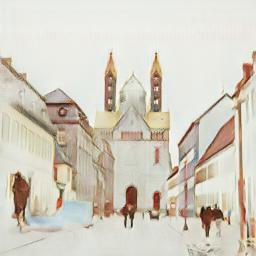

The results are interesting, and many of them have the look of works of art. Moreover, the style varies from image to image. One is tempted to think that the GAN has succeeded to match each image to those paintings which are most suitable as style models. Yet I am hesitant to make such a claim.

The results as a whole do not look so modern as the dataset did. In particular, the abstract works seem to have had no visible effect. This is understandable, because even the authors of CycleGAN write that it performs well with transformations of color and texture, but fails when expected to transform larger structures (such as shape, I assume). This appears to be a general handicap with neural network based image transforms.

Would it be possible to post the original images alongside the transformed ones?

Interesting work nonetheless.