I have noticed that I prefer the original neural-style over the faster variants like texture-nets and fast-neural-style. One reason is that neural-style allows more control and more immediate feedback when working on images; this matters when one is not interested in developing reusable filters but in working on individual images and developing new styles.

A perhaps even more useful aspect of neural-style is that, once one has become familiar with the code, one is not limited to the ready-made features of the software as is. Neural-style is a very good basis for experimenting with modifications and new ideas. This thread is a good demonstration of how one can use the code as a basis for further explorations.

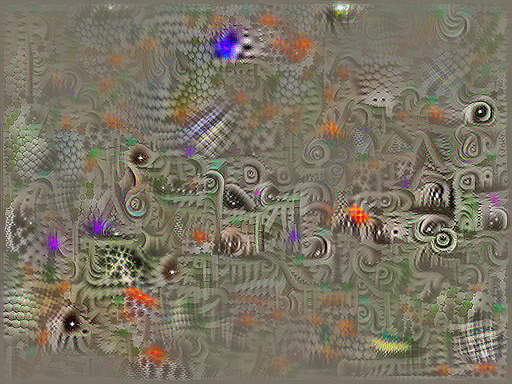

Recently, I started wondering how style would be affected if one tampers with the Gram matrix, which is now understood to give a statistical representation of a style. I made a quick test in which I replaced the Gram matrix with a fully random matrix. I was not surprised that the results were not very promising. The iteration process did not always proceed very well, and the images it produced were often quite bleak. Yet, after some level adjustment, some of the images were simply amazing.

This was promising enough. I am usually not at all interested in deep-dream style images, but here the fantasy elements are somehow at the same time intriguing and balanced.

I then started to look deeper into what a Gram matrix contains, and tried to devise heuristic rules for generating random matrices that are more like the representations of real styles. I found out that a Gram matrix is always symmetric. Then I noticed that in practice there appear to be a few rows and columns with much smaller than average values. But this did not lead much further.
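The symmetry follows directly from how a Gram matrix is built: entry (i, j) is the inner product of the flattened activations of channels i and j, and swapping i and j gives the same number. Here is a minimal NumPy sketch of that, not the code used in these experiments. The function name `gram_matrix` and the random stand-in activations are assumptions made for illustration only.

```python
import numpy as np

def gram_matrix(features):
    """Gram matrix of a (channels, height, width) activation array."""
    channels = features.shape[0]
    flat = features.reshape(channels, -1)  # one row per channel
    return flat @ flat.T                   # shape (channels, channels)

# Random stand-in for real layer activations (hypothetical data).
rng = np.random.default_rng(0)
features = rng.standard_normal((8, 16, 16))
gram = gram_matrix(features)

print(gram.shape)                 # (8, 8)
print(np.allclose(gram, gram.T))  # True: G[i, j] == G[j, i]
```

A randomly filled matrix has neither this symmetry nor the non-negative eigenvalues of a real Gram matrix, which may be part of why fully random replacements behave erratically.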

Finally I had to turn to the mathematics… I studied matrices and linear algebra at the technical university, but that was decades ago. Anyway, my next idea was to decompose the Gram matrix into eigenvalues and eigenvectors, modify the eigenvectors and then reconstruct a modified style matrix.
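For readers who want to try the decomposition step on its own, the following is a rough NumPy sketch rather than the code used here. It assumes the Gram matrix is symmetric, so `np.linalg.eigh` applies; the helper names `decompose` and `reconstruct` and the random test data are hypothetical.

```python
import numpy as np

def decompose(gram):
    # eigh is meant for symmetric matrices: it returns real eigenvalues
    # and orthonormal eigenvectors stored as the columns of the result.
    eigenvalues, eigenvectors = np.linalg.eigh(gram)
    return eigenvalues, eigenvectors

def reconstruct(eigenvalues, eigenvectors):
    # V * w scales column j by eigenvalue j, so this is V diag(w) V^T.
    return (eigenvectors * eigenvalues) @ eigenvectors.T

# Random stand-in for a real style Gram matrix (hypothetical data).
rng = np.random.default_rng(0)
flat = rng.standard_normal((8, 256))
gram = flat @ flat.T

w, v = decompose(gram)
rebuilt = reconstruct(w, v)
print(np.allclose(rebuilt, gram))  # True: unmodified parts give back the original
```

Any modification of the style then happens between the two calls, by changing `v` (or `w`) before reconstructing.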

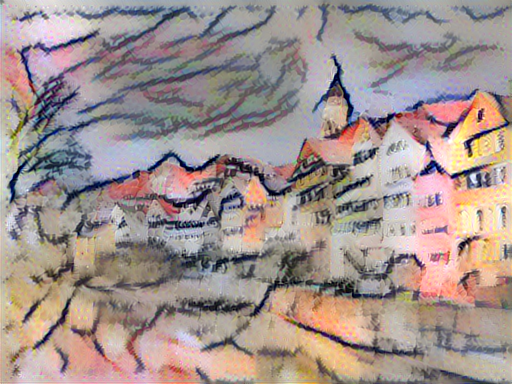

I found that when the eigenvectors are modified by multiplying each element by a random multiplier between 0.1 and 1.9, one gets reasonable and not too far-out modifications of the same style.
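A hedged NumPy sketch of this kind of perturbation follows; it is not the code used for the images. The function name `perturb_eigenvectors` is hypothetical, and rebuilding with the same modified eigenvectors on both sides (which keeps the result symmetric) is an assumption about how the reconstruction might be done.

```python
import numpy as np

def perturb_eigenvectors(gram, low=0.1, high=1.9, rng=None):
    """Scale every eigenvector element by its own random factor."""
    rng = np.random.default_rng() if rng is None else rng
    w, v = np.linalg.eigh(gram)
    # One independent multiplier per element, drawn uniformly from [low, high).
    v_mod = v * rng.uniform(low, high, size=v.shape)
    # Assumption: rebuild as V' diag(w) V'^T so the result stays symmetric.
    return (v_mod * w) @ v_mod.T

# Random stand-in for a real style Gram matrix (hypothetical data).
rng = np.random.default_rng(0)
flat = rng.standard_normal((8, 256))
gram = flat @ flat.T

modified = perturb_eigenvectors(gram, rng=rng)
print(np.allclose(modified, modified.T))  # True: still symmetric
```

Because the multipliers are centred on 1, each modified eigenvector stays correlated with the original one, which fits the observation that the style remains recognizable.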

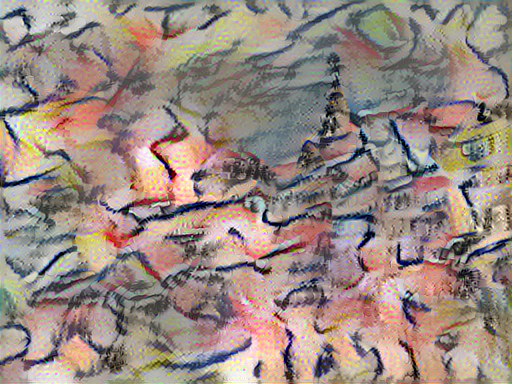

If, on the other hand, the original eigenvectors are replaced by random values, the results are more drastic and somewhat similar to the fantasy image above. The first two examples are variations using the same style weight, and in the last example style weight has been increased and the content image is no longer recognizable.
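The more drastic variant can be sketched in the same way. This is again only an illustration under stated assumptions: the name `randomize_eigenvectors` is hypothetical, the random values are drawn from a standard normal distribution, and each random vector is scaled to unit length so that the overall magnitude of the matrix stays comparable to the original. None of these choices is confirmed by the experiments described here.

```python
import numpy as np

def randomize_eigenvectors(gram, rng=None):
    """Keep the eigenvalues but swap the eigenvectors for random vectors."""
    rng = np.random.default_rng() if rng is None else rng
    w, v = np.linalg.eigh(gram)
    v_rand = rng.standard_normal(v.shape)
    # Assumption: normalize each column to unit length, like a real eigenvector.
    v_rand /= np.linalg.norm(v_rand, axis=0, keepdims=True)
    return (v_rand * w) @ v_rand.T

# Random stand-in for a real style Gram matrix (hypothetical data).
rng = np.random.default_rng(0)
flat = rng.standard_normal((8, 256))
gram = flat @ flat.T

randomized = randomize_eigenvectors(gram, rng=rng)
print(np.allclose(randomized, randomized.T))             # True: symmetric
print(np.isclose(np.trace(randomized), np.trace(gram)))  # True: trace is kept
```

With unit-length columns the trace equals the sum of the eigenvalues, so the total "energy" of the style matrix is preserved while the way it is distributed across channels is entirely new.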

The code I have used can be downloaded in two versions (note that the code assumes that a GPU is being used, because that is what I used).

So far, I have found it easiest to use a single style layer in my experiments, mostly using the relu3_x layers. It appears to me that by looking at the variations of style one gets from each layer, one also learns something about the peculiar characteristics of each layer. For instance, compare the simple, more or less straight lines using relu3_2 on the left with the curved lines using relu3_3 on the right: